Workflow:¶

- Problem statement

- Data collectio (DB, Server, Cloud, csv, xlsx, webscrapping)

- Data Inspection

- Data Cleaning

- Exploratory Data Analysis

- Feature Engineering(Encoding)

- Train-Test split

- Model Training

- Dodel Evaluation

- Hyperparameter Tuning

- Deployment

Local Machine -----Docker (CI/CD Pipeline with Git ----Cloud

- Local machine - write our codes

- cloud - where we deploy our codes to run 24/7

- Pipeline - to flow /pathway

- GIT - Changes will be reflected automatically

- Docker - Transportation of our codes

Collective Inspection¶

Individual Inspection¶

Visual Inspection¶

Conventional method to clean the data¶

- Dropping the Irrelevant columns

- Hadling Missing values

- Handling the duplicate data

- Converting Data types

- Outlier detection & removal

- Fixing the Inconsistent Data

- Dealing with Time Related columns

# This Python 3 environment comes with many helpful analytics libraries installed

# It is defined by the kaggle/python Docker image: https://github.com/kaggle/docker-python

# For example, here's several helpful packages to load

import numpy as np # linear algebra

import pandas as pd # data processing, CSV file I/O (e.g. pd.read_csv)

# Input data files are available in the read-only "../input/" directory

# For example, running this (by clicking run or pressing Shift+Enter) will list all files under the input directory

import os

for dirname, _, filenames in os.walk('/kaggle/input'):

for filename in filenames:

print(os.path.join(dirname, filename))

# You can write up to 20GB to the current directory (/kaggle/working/) that gets preserved as output when you create a version using "Save & Run All"

# You can also write temporary files to /kaggle/temp/, but they won't be saved outside of the current session/kaggle/input/fraudtest/fraudTest.csv

# 🧹 This line removes JupyterLab (a tool that helps run notebooks),

# because we don't need it here and it can sometimes cause errors.

!pip uninstall -qy jupyterlab

# 🚀 This line installs the Google Generative AI Python library (google-genai)

# The version we're installing is 1.7.0 — it's like downloading the latest brain

# that helps our code talk to Google's smart Gemini robot!

!pip install -U -q "google-genai==1.7.0"

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 144.7/144.7 kB 7.1 MB/s eta 0:00:00

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 100.9/100.9 kB 5.0 MB/s eta 0:00:00

# We are using special tools from Google to talk to the Gemini AI

from google import genai

# We are importing extra tools (called "types") to help talk to Gemini in a smart way

from google.genai import types

# These tools help us show pretty text and output (like bold, colors, or math) in Jupyter Notebooks

from IPython.display import HTML, Markdown, display

# We import something called `retry` from Google's API tools.

# It helps us automatically try again if something goes wrong (like the internet is slow).

from google.api_core import retry

# This line says: "If there's an error, check if it's an API error AND the code is 429 or 503"

# - 429 means: "Too many requests, slow down!"

# - 503 means: "Server is too busy or not ready!"

# So we want to retry only if it's one of these errors.

is_retriable = lambda e: (isinstance(e, genai.errors.APIError) and e.code in {429, 503})

# Now we take Gemini’s generate_content function and wrap it with a retry feature.

# That means: if Gemini is too busy (429/503), it will try again automatically instead of giving up.

genai.models.Models.generate_content = retry.Retry(

predicate=is_retriable # This tells it which errors to retry

)(genai.models.Models.generate_content) # This is the actual function we're retrying

# This line imports a special helper from Kaggle that lets us get secret info (like passwords or keys)

from kaggle_secrets import UserSecretsClient

# This line gets our super-secret Google API key from Kaggle's safe storage.

# It's like opening a locked treasure chest and grabbing the special key we need to talk to Gemini!

GOOGLE_API_KEY = UserSecretsClient().get_secret("GOOGLE_API_KEY")

# First, we make a connection to the smart Gemini robot by using our secret API key.

# This is like saying "Hi Gemini! Here's my key, can I ask you something?"

client = genai.Client(api_key = GOOGLE_API_KEY)

# Now we ask Gemini a question using the model "gemini-2.0-flash".

# We give it some text (our question) and ask it to explain black holes in a simple way.

response = client.models.generate_content(

model = "gemini-2.0-flash", # This is the brain we're using (a fast version!)

contents = "Explain about the Black holes to me like I'm a kid in a single line" # Our question

)

# We print the answer that Gemini gives us.

# This will show up in our screen like a fun explanation from a smart robot friend.

print(response.text)

Black holes are like super-strong vacuum cleaners in space that suck up everything, even light!

Markdown(response.text)Loading...

display(response.text)'Black holes are like super-strong vacuum cleaners in space that suck up everything, even light!\n'client = genai.Client(api_key=GOOGLE_API_KEY)

response = client.models.generate_content(

model = "gemini-2.0-flash",

contents = "Explain about Singularity briefly about 2 lines"

)

print(response.text)Singularity is a container platform focused on enabling portability and reproducibility in high-performance computing (HPC) and scientific workflows. It allows users to package entire software environments into a single, portable file, making it easier to run applications consistently across different systems.

# This line starts a new chat with the Gemini robot.

# We tell it which version to use ('gemini-2.0-flash') and start with an empty history.

chat = client.chats.create(model='gemini-2.0-flash', history=[])

# Now we send a message to the Gemini robot, like saying "Hi!"

# The robot will think and answer back.

response = chat.send_message('Hello!, My name is Rajesh Karra')

# Finally, we print the robot's reply so we can read it.

print(response.text)

Hello Rajesh! It's nice to meet you. How can I help you today?

response = chat.send_message('Can you explain me about how the warmholes created in just about 30 words')

print(response.text)Wormholes are theoretical tunnels through spacetime, created by extreme warping of space, potentially connecting distant points or even different universes. They require exotic matter with negative mass-energy density to remain stable.

response = chat.send_message('Do you remember what my name is')

print(response.text)Yes, your name is Rajesh Karra.

client = genai.Client(api_key = GOOGLE_API_KEY)

response = client.models.generate_content(

model = "gemini-2.0-flash",

contents = "Causes of smell from the mouth, whether it is because of Vitamin defeciency or food"

)

print(response.text)

#Markdown(response.text)Okay, let's break down the causes of bad breath (halitosis) and how they relate to vitamin deficiencies and food.

**I. Major Causes of Bad Breath (Halitosis):**

* **Oral Hygiene:** This is the BIGGEST and most common culprit.

* **Plaque and Bacteria:** Bacteria naturally live in your mouth. When you don't brush and floss regularly, these bacteria feed on food particles and dead cells, producing volatile sulfur compounds (VSCs) as a byproduct. VSCs are what cause the foul odor. The back of the tongue is a particularly problematic area, as it has a rough surface that traps bacteria and debris.

* **Food Particles:** Trapped food particles decompose and contribute to bad breath.

* **Dental Problems:** Cavities, gum disease (gingivitis and periodontitis), and poorly fitting dentures can all harbor bacteria and food, leading to bad breath.

* **Dry Mouth (Xerostomia):** Saliva helps to cleanse the mouth by washing away food particles and bacteria. When saliva production is reduced, bacteria thrive, leading to bad breath. Dry mouth can be caused by medications, certain medical conditions (e.g., Sjogren's syndrome), or simply breathing through your mouth.

* **Food:**

* **Strong-Smelling Foods:** Garlic, onions, spices (like curry), and some cheeses contain compounds that are absorbed into the bloodstream and exhaled through the lungs, causing temporary bad breath.

* **High-Protein Diets:** Diets very high in protein and low in carbohydrates can sometimes lead to ketosis, a metabolic state where the body burns fat for energy. Ketosis can produce a distinctive, sometimes fruity, odor on the breath.

* **Medical Conditions:**

* **Sinus Infections:** Postnasal drip provides a food source for bacteria.

* **Respiratory Infections:** Bronchitis, pneumonia, and other respiratory infections can produce foul-smelling mucus.

* **Kidney Disease:** Kidney failure can lead to a buildup of toxins in the body, which can cause a characteristic ammonia-like odor on the breath.

* **Liver Disease:** Severe liver disease can sometimes cause a musty or sweet odor on the breath.

* **Diabetes:** Uncontrolled diabetes can lead to diabetic ketoacidosis (DKA), which can cause a fruity or acetone-like odor on the breath.

* **Gastroesophageal Reflux Disease (GERD):** Stomach acid and undigested food can reflux into the esophagus and mouth, causing bad breath.

* **Other Conditions:** Some rare metabolic disorders can also cause distinctive breath odors.

* **Tobacco Use:**

* Smoking and chewing tobacco dry out the mouth, irritate the gums, and leave behind a distinct odor. They also increase the risk of gum disease.

* **Medications:**

* Some medications can cause dry mouth as a side effect. Others may release chemicals that are exhaled and contribute to bad breath.

**II. Vitamin Deficiencies and Bad Breath:**

While not a direct *cause* of bad breath, certain vitamin deficiencies can contribute to conditions that *lead* to it:

* **Vitamin C Deficiency (Scurvy):** Scurvy weakens connective tissues, including those in the gums. This can lead to bleeding gums, which are more susceptible to infection and bacterial growth, resulting in bad breath. Vitamin C is also important for wound healing, and deficiency can exacerbate gum disease.

* **Vitamin D Deficiency:** Emerging research suggests a link between vitamin D deficiency and periodontal disease (gum disease). Vitamin D plays a role in immune function and bone health, and its deficiency may worsen inflammation in the gums, contributing to bad breath.

* **B Vitamin Deficiencies (Especially B3 - Niacin):** In severe cases, Niacin deficiencies cause the disease Pellagra. A symptom of this disease is a bright red tongue, and mouth ulcers. All of these symptoms encourage bacterial growth, and lead to bad breath.

**Important Considerations about Vitamin Deficiencies:**

* **Rarity:** Significant vitamin deficiencies are relatively uncommon in developed countries where people generally have access to a varied diet.

* **Indirect Impact:** Vitamin deficiencies are more likely to *contribute* to bad breath by weakening the gums or affecting overall health than to be the sole cause.

* **Other Symptoms:** If you suspect a vitamin deficiency, you'll likely experience other symptoms besides bad breath, such as fatigue, weakness, skin problems, or neurological issues. Consult a doctor for proper diagnosis and treatment.

**III. How Food Directly Causes Bad Breath:**

* **Specific Foods:**

* **Garlic and Onions:** These contain sulfur compounds that are absorbed into the bloodstream and released through the lungs. The odor can linger for several hours, even after brushing.

* **Sugary Foods:** Sugar feeds bacteria in the mouth, leading to acid production and bad breath.

* **Coffee:** Coffee can contribute to dry mouth, creating a more favorable environment for bacteria. It can also leave a residue in the mouth.

* **Alcohol:** Alcohol also leads to dry mouth, which encourages bacteria growth.

* **Decomposition:** Food particles left in the mouth decompose and release foul-smelling compounds.

**IV. What to Do About Bad Breath:**

1. **Practice Excellent Oral Hygiene:**

* Brush your teeth thoroughly at least twice a day, especially after meals. Use fluoride toothpaste.

* Floss daily to remove plaque and food particles from between your teeth.

* Scrape your tongue daily with a tongue scraper to remove bacteria and debris.

* Clean dentures properly and regularly.

2. **Stay Hydrated:** Drink plenty of water to keep your mouth moist and wash away food particles.

3. **Chew Sugar-Free Gum:** Chewing gum stimulates saliva production, which helps cleanse the mouth.

4. **Rinse with Mouthwash:** Use an antibacterial mouthwash to kill bacteria and freshen your breath.

5. **See Your Dentist Regularly:** Get regular checkups and cleanings to detect and treat dental problems.

6. **Address Underlying Medical Conditions:** If you suspect a medical condition is causing your bad breath, see your doctor for diagnosis and treatment.

7. **Dietary Changes:** Limit strong-smelling foods like garlic and onions. Reduce your intake of sugary foods and drinks.

8. **Quit Smoking:** Smoking is a major contributor to bad breath and other health problems.

9. **Consider a Probiotic:** Some studies suggest that certain probiotics can help reduce bad breath by altering the balance of bacteria in the mouth.

10. **Assess Vitamin Levels:** If you have other symptoms that suggest vitamin deficiency, see a doctor and request bloodwork for assessment.

**In summary:** While vitamin deficiencies *can* contribute to conditions that worsen bad breath (particularly through their impact on gum health), the most common causes are related to oral hygiene, diet, and underlying medical conditions. If you're concerned about bad breath, focus on improving your oral hygiene, addressing any dietary issues, and seeing your dentist regularly. If the problem persists, consult your doctor to rule out any underlying medical conditions or vitamin deficiencies.

# This line goes through all the models that the Gemini client knows about

for model in client.models.list():

# For each model, we print its name so we can see which ones we can use

print(model.name)

models/embedding-gecko-001

models/gemini-1.0-pro-vision-latest

models/gemini-pro-vision

models/gemini-1.5-pro-latest

models/gemini-1.5-pro-001

models/gemini-1.5-pro-002

models/gemini-1.5-pro

models/gemini-1.5-flash-latest

models/gemini-1.5-flash-001

models/gemini-1.5-flash-001-tuning

models/gemini-1.5-flash

models/gemini-1.5-flash-002

models/gemini-1.5-flash-8b

models/gemini-1.5-flash-8b-001

models/gemini-1.5-flash-8b-latest

models/gemini-1.5-flash-8b-exp-0827

models/gemini-1.5-flash-8b-exp-0924

models/gemini-2.5-pro-exp-03-25

models/gemini-2.5-pro-preview-03-25

models/gemini-2.5-flash-preview-04-17

models/gemini-2.5-flash-preview-05-20

models/gemini-2.5-flash-preview-04-17-thinking

models/gemini-2.5-pro-preview-05-06

models/gemini-2.5-pro-preview-06-05

models/gemini-2.0-flash-exp

models/gemini-2.0-flash

models/gemini-2.0-flash-001

models/gemini-2.0-flash-exp-image-generation

models/gemini-2.0-flash-lite-001

models/gemini-2.0-flash-lite

models/gemini-2.0-flash-preview-image-generation

models/gemini-2.0-flash-lite-preview-02-05

models/gemini-2.0-flash-lite-preview

models/gemini-2.0-pro-exp

models/gemini-2.0-pro-exp-02-05

models/gemini-exp-1206

models/gemini-2.0-flash-thinking-exp-01-21

models/gemini-2.0-flash-thinking-exp

models/gemini-2.0-flash-thinking-exp-1219

models/gemini-2.5-flash-preview-tts

models/gemini-2.5-pro-preview-tts

models/learnlm-2.0-flash-experimental

models/gemma-3-1b-it

models/gemma-3-4b-it

models/gemma-3-12b-it

models/gemma-3-27b-it

models/gemma-3n-e4b-it

models/embedding-001

models/text-embedding-004

models/gemini-embedding-exp-03-07

models/gemini-embedding-exp

models/aqa

models/imagen-3.0-generate-002

models/veo-2.0-generate-001

models/gemini-2.5-flash-preview-native-audio-dialog

models/gemini-2.5-flash-preview-native-audio-dialog-rai-v3

models/gemini-2.5-flash-exp-native-audio-thinking-dialog

models/gemini-2.0-flash-live-001

from pprint import pprint # This helps print things in a neat and easy-to-read way

# We go through all the AI models available using the Gemini API

for model in client.models.list():

# We check if the model's name is "gemini-2.0-flash" (this is the one we want to use)

if model.name == 'models/gemini-2.0-flash':

# If we found it, we print out all its details nicely (like how it works, its version, etc.)

pprint(model.to_json_dict())

# Stop the loop — we found what we were looking for!

break

{'description': 'Gemini 2.0 Flash',

'display_name': 'Gemini 2.0 Flash',

'input_token_limit': 1048576,

'name': 'models/gemini-2.0-flash',

'output_token_limit': 8192,

'supported_actions': ['generateContent',

'countTokens',

'createCachedContent',

'batchGenerateContent'],

'tuned_model_info': {},

'version': '2.0'}

# We import something called "types" from Google's genai tools.

# These help us set up rules for how the Gemini model should respond.

from google.genai import types

# We are creating a setting (called config) that says:

# "Give me no more than 200 words (tokens) in the answer."

short_config = types.GenerateContentConfig(max_output_tokens = 200)

# Now we ask Gemini to do something for us!

# We tell it:

# - Which model to use: "gemini-2.0-flash" (this is a fast and smart AI)

# - What settings to follow (short_config)

# - What question or task to answer: "Write a 100 word essay on Einstein's E=MC^2"

response = client.models.generate_content(

model = "gemini-2.0-flash",

config = short_config,

contents = "Write a 100 word essay on the Einstein's E=MC^2"

)

# Finally, we print out what Gemini said (its answer!)

print(response.text)

Einstein's E=mc², a cornerstone of modern physics, expresses the fundamental equivalence of energy (E) and mass (m). The equation reveals that mass can be converted into energy and vice versa, with 'c' representing the speed of light, a colossal constant. This seemingly simple formula has profound implications, explaining the immense power unleashed in nuclear reactions like those within stars and atomic bombs. E=mc² demonstrated that a tiny amount of mass contains an enormous amount of energy. This revolutionary concept reshaped our understanding of the universe and paved the way for technological advancements in fields like nuclear power and medicine.

response = chat.send_message('Do you remember my name')

print(response.text)Yes, I remember your name is Rajesh Karra.

# First, we create a "config" that controls how random the AI's answers are.

# A higher temperature like 2.0 makes the answers more creative and surprising!

high_temp_config = types.GenerateContentConfig(temperature=2.0)

# Now we repeat the next part 5 times

for _ in range(5):

# We ask the Gemini model (the smart robot) to give us a random color name

# We give it our "high randomness" config so it gives different answers

response = client.models.generate_content(

model="gemini-2.0-flash", # This tells it which version of Gemini to use

config=high_temp_config, # This is how random/creative it should be

contents="Pick a random color...(respond in a single word)" # This is our question

)

# If we got an answer from Gemini...

if response.text:

# Print the answer and a row of dashes to separate the outputs

print(response.text, '-' * 25)

Turquoise

-------------------------

Emerald

-------------------------

Azure

-------------------------

Magenta.

-------------------------

Azure

-------------------------

response = chat.send_message('Do you rememeber my name')

print(response.text)Yes, I remember your name is Rajesh Karra. I'm programmed to remember information within a conversation.

# This line sets up how "creative" or "random" Gemini should be.

# Temperature = 0.0 means: "Be very serious and give the same answer every time"

low_temp_config = types.GenerateContentConfig(temperature = 0.0)

# We are going to ask Gemini the same question 5 times

for _ in range(5):

# We ask Gemini: "Pick a random color... (just one word)"

# But because the temperature is low, it may not be very random

response = client.models.generate_content(

model = "gemini-2.0-flash", # This is the name of the Gemini model we are using

config = low_temp_config, # We tell Gemini to use the serious (low-temp) setting

contents = 'Pick a random color...(respond in a single word)' # This is the question we ask

)

# If Gemini gave us an answer, we print it

if response.text:

print(response.text, '-' * 25) # We also print some dashes to separate each answer

Azure

-------------------------

Azure

-------------------------

Azure

-------------------------

Azure

-------------------------

Azure

-------------------------

# First, we are setting how the model should behave using settings

# temperature = how creative Gemini should be (higher = more random and fun!)

# top_p = helps pick the best words (like choosing from a smart list)

model_config = types.GenerateContentConfig(

temperature = 1.0, # Full creativity

top_p = 0.95 # Pick from the top 95% of best next words

)

# Now we give Gemini a fun task!

# We're pretending it's a creative writer and asking it to write a story

story_prompt = "you are a creative writer. Write a short story about a cat who goes on an adventure"

# This is where we ask Gemini to do the job

# We send our prompt and settings to Gemini, and it gives us a story

response = client.models.generate_content(

model = "gemini-2.0-flash", # We're using the fast Gemini model

config = model_config, # Use the creative settings we picked

contents = story_prompt # This is our prompt (what we want Gemini to write)

)

# Finally, we print out the story Gemini created!

print(response.text)

Jasper, a marmalade tabby of discerning tastes and dramatic flair, considered his life rather…beige. Sure, his humans, Mildred and Bartholomew, provided him with the finest salmon pate and the fluffiest cushions. But the world beyond the window, ah, that was a symphony of unexplored scents, unheard melodies, and un-chased butterflies.

One particularly dull Tuesday, as Bartholomew snored through the BBC news, Jasper decided enough was enough. He'd had it with predictability. He was destined for adventure! He stretched dramatically, extended a claw, and carefully manipulated the latch on the back door, a feat he’d practiced for weeks.

Freedom! The air, thick with the aroma of damp earth and burgeoning roses, hit him like a velvet paw. He stalked into the long grass, a tiny tiger in a miniature jungle. The neighborhood, usually viewed from the safe confines of his windowsill, was a riot of sensory overload.

He first encountered Agnes, a fluffy Persian with a voice like sandpaper, reigning over her prize-winning geraniums. "Well, well, well," she rasped, "look what the cat dragged in. Mildred's precious boy! Out for a wander, are we?" Jasper, unimpressed, simply twitched his tail and continued his expedition.

Next, he found himself face-to-face with a squirrel, Mr. Nutsy, who chattered furiously, his tail a blur of indignation. Jasper, usually content to watch Mr. Nutsy from afar, felt a surge of primal instinct. He crouched, his muscles coiled, ready to pounce. But then, Mr. Nutsy dropped a perfect, glistening acorn at Jasper's paws. Jasper, confused, blinked. Was this…tribute? He sniffed the acorn, then, feeling strangely awkward, nudged it back towards the squirrel.

As the sun began to dip below the horizon, painting the sky in hues of orange and purple, Jasper found himself by the creek. He'd never seen so much water in one place! He cautiously dipped a paw, recoiling with a shiver. Suddenly, he heard a faint meow.

Hidden beneath a willow tree was a tiny, shivering kitten, no bigger than Jasper's paw. Its fur was matted and its eyes were wide with fear. Jasper, the pampered house cat, felt a pang of something unfamiliar – compassion.

He nuzzled the kitten, purring softly, a sound he usually reserved for Mildred's lap. He stayed with the kitten, sharing what little warmth he could offer, until finally, the small creature stopped trembling.

As the stars began to twinkle, Jasper knew he couldn't stay out all night. Mildred and Bartholomew would be worried. He nudged the kitten one last time, then turned and began the trek back home, his adventure unexpectedly cut short.

He slipped back into the house, the door still ajar. Mildred, frantic, scooped him up in a hug, burying her face in his fur. "Jasper! Where have you been?"

As he purred, nestled in Mildred's arms, Jasper realized that adventure wasn't about conquering unknown territories or chasing squirrels. It was about experiencing the world, connecting with others, and maybe, just maybe, making a difference, however small. And that, he decided, was an adventure worth having. He secretly planned to bring back some salmon pate to that little kitten tomorrow.

# 🧠 This block sets how the Gemini model should behave

# Think of it like telling the AI:

# "Be calm (low temperature), give full answers (top_p), and don’t talk too much (limit tokens)"

model_config = types.GenerateContentConfig(

temperature = 0.1, # Low temperature = more predictable answers

top_p = 1, # Top-p = allow full creativity range (1 = use all words)

max_output_tokens = 5, # Only return up to 5 words in the answer

)

# 📝 This is the message (prompt) we send to Gemini

# We’re asking it to guess the emotion of a movie review (Positive, Neutral, or Negative)

zero_shot_prompt = """Classify movie reviews as POSITIVE, NEUTRAL or NEGATIVE.

Review: "Her" is a disturbing study revealing the direction

humanity is headed if AI is allowed to keep evolving,

unchecked. I wish there were more movies like this masterpiece.

Sentiment: """ # 👈 We're asking Gemini to complete this line with one word: POSITIVE, NEUTRAL, or NEGATIVE

# 🤖 Now we send the prompt to Gemini to get an answer

response = client.models.generate_content(

model = "gemini-2.0-flash", # Use the fast Gemini model

config = model_config, # Tell it how to behave (from above)

contents = zero_shot_prompt # Give it the question we want answered

)

# 📢 Show Gemini's answer on the screen

print(response.text)

POSITIVE

# We import a special tool called 'enum' that helps us make a list of choices

import enum

# We make a class (like a labeled box) called Sentiment

# Inside, we list 3 possible feelings (or moods):

# - POSITIVE: means happy or good

# - NEUTRAL: means okay, not good or bad

# - NEGATIVE: means sad or bad

class Sentiment(enum.Enum):

POSITIVE = 'positive'

NEUTRAL = 'neutral'

NEGATIVE = 'negative'

# We ask the Gemini robot to give us a response based on some input (zero_shot_prompt)

# We tell it:

# - Use the "gemini-2.0-flash" model

# - Expect the answer to be one of the moods (Sentiment)

# - Send the question we want to ask (zero_shot_prompt)

response = client.models.generate_content(

model = "gemini-2.0-flash",

config = types.GenerateContentConfig(

response_mime_type = "text/x.enum", # This says: "Send the answer in one of our enum moods"

response_schema = Sentiment # This tells Gemini to use our Sentiment choices

),

contents = zero_shot_prompt # This is the actual thing we ask Gemini to think about

)

# Print the answer Gemini gives us!

print(response.text)

positive

enum_response = response.parsed

print(enum_response)

print(type(enum_response))Sentiment.POSITIVE

<enum 'Sentiment'>

help(response.parsed)Help on Sentiment in module __main__ object:

class Sentiment(enum.Enum)

| Sentiment(value, names=None, *, module=None, qualname=None, type=None, start=1)

|

| An enumeration.

|

| Method resolution order:

| Sentiment

| enum.Enum

| builtins.object

|

| Data and other attributes defined here:

|

| NEGATIVE = <Sentiment.NEGATIVE: 'negative'>

|

| NEUTRAL = <Sentiment.NEUTRAL: 'neutral'>

|

| POSITIVE = <Sentiment.POSITIVE: 'positive'>

|

| ----------------------------------------------------------------------

| Data descriptors inherited from enum.Enum:

|

| name

| The name of the Enum member.

|

| value

| The value of the Enum member.

|

| ----------------------------------------------------------------------

| Readonly properties inherited from enum.EnumMeta:

|

| __members__

| Returns a mapping of member name->value.

|

| This mapping lists all enum members, including aliases. Note that this

| is a read-only view of the internal mapping.

few_shot_prompt = """Parse a customer's pizza order into valid JSON:

EXAMPLE:

I want a small pizza with cheese, tomato sauce, and pepperoni.

JSON Response:

```

{

"size": "small",

"type": "normal",

"ingredients": ["cheese", "tomato sauce", "pepperoni"]

}

```

EXAMPLE:

Can I get a large pizza with tomato sauce, basil and mozzarella

JSON Response:

```

{

"size": "large",

"type": "normal",

"ingredients": ["tomato sauce", "basil", "mozzarella"]

}

```

ORDER:

"""

# 🗣️ This is what the customer actually says

customer_order = "Give me a large with cheese & pineapple"

# 🤖 We now ask Gemini AI to help us figure out what this order means in code (JSON)

response = client.models.generate_content(

model = "gemini-2.0-flash", # 🧠 We're using Gemini Flash, a fast AI model

config = types.GenerateContentConfig(

temperature = 0.1, # 🎯 Low temperature = more accurate/serious answers

top_p = 1, # 📊 Controls randomness; 1 = normal

max_output_tokens = 250, # 📏 Max length of the answer

),

# 📦 We send both our instructions (examples) and the customer order

contents = [few_shot_prompt, customer_order]

)

# 🖨️ Finally, we print what Gemini answered!

print(response.text)```json

{

"size": "large",

"type": "normal",

"ingredients": ["cheese", "pineapple"]

}

```

Collective and Individual Inspection of a dataset¶

!pip install kagglehubRequirement already satisfied: kagglehub in /usr/local/lib/python3.10/dist-packages (0.3.9)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from kagglehub) (24.2)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.10/dist-packages (from kagglehub) (6.0.2)

Requirement already satisfied: requests in /usr/local/lib/python3.10/dist-packages (from kagglehub) (2.32.3)

Requirement already satisfied: tqdm in /usr/local/lib/python3.10/dist-packages (from kagglehub) (4.67.1)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub) (3.4.1)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub) (3.10)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub) (2.3.0)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub) (2025.1.31)

!pip install --upgrade kagglehub[pandas-datasets]

Requirement already satisfied: kagglehub[pandas-datasets] in /usr/local/lib/python3.10/dist-packages (0.3.9)

Collecting kagglehub[pandas-datasets]

Downloading kagglehub-0.3.12-py3-none-any.whl.metadata (38 kB)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from kagglehub[pandas-datasets]) (24.2)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.10/dist-packages (from kagglehub[pandas-datasets]) (6.0.2)

Requirement already satisfied: requests in /usr/local/lib/python3.10/dist-packages (from kagglehub[pandas-datasets]) (2.32.3)

Requirement already satisfied: tqdm in /usr/local/lib/python3.10/dist-packages (from kagglehub[pandas-datasets]) (4.67.1)

Requirement already satisfied: pandas in /usr/local/lib/python3.10/dist-packages (from kagglehub[pandas-datasets]) (2.2.3)

Requirement already satisfied: numpy>=1.22.4 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[pandas-datasets]) (1.26.4)

Requirement already satisfied: python-dateutil>=2.8.2 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[pandas-datasets]) (2.9.0.post0)

Requirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[pandas-datasets]) (2025.1)

Requirement already satisfied: tzdata>=2022.7 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[pandas-datasets]) (2025.1)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[pandas-datasets]) (3.4.1)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[pandas-datasets]) (3.10)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[pandas-datasets]) (2.3.0)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[pandas-datasets]) (2025.1.31)

Requirement already satisfied: mkl_fft in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (1.3.8)

Requirement already satisfied: mkl_random in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (1.2.4)

Requirement already satisfied: mkl_umath in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (0.1.1)

Requirement already satisfied: mkl in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2025.0.1)

Requirement already satisfied: tbb4py in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2022.0.0)

Requirement already satisfied: mkl-service in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2.4.1)

Requirement already satisfied: six>=1.5 in /usr/local/lib/python3.10/dist-packages (from python-dateutil>=2.8.2->pandas->kagglehub[pandas-datasets]) (1.17.0)

Requirement already satisfied: intel-openmp>=2024 in /usr/local/lib/python3.10/dist-packages (from mkl->numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2024.2.0)

Requirement already satisfied: tbb==2022.* in /usr/local/lib/python3.10/dist-packages (from mkl->numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2022.0.0)

Requirement already satisfied: tcmlib==1.* in /usr/local/lib/python3.10/dist-packages (from tbb==2022.*->mkl->numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (1.2.0)

Requirement already satisfied: intel-cmplr-lib-rt in /usr/local/lib/python3.10/dist-packages (from mkl_umath->numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2024.2.0)

Requirement already satisfied: intel-cmplr-lib-ur==2024.2.0 in /usr/local/lib/python3.10/dist-packages (from intel-openmp>=2024->mkl->numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2024.2.0)

Downloading kagglehub-0.3.12-py3-none-any.whl (67 kB)

━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 68.0/68.0 kB 2.8 MB/s eta 0:00:00

Installing collected packages: kagglehub

Attempting uninstall: kagglehub

Found existing installation: kagglehub 0.3.9

Uninstalling kagglehub-0.3.9:

Successfully uninstalled kagglehub-0.3.9

Successfully installed kagglehub-0.3.12

!pip install daskRequirement already satisfied: dask in /usr/local/lib/python3.10/dist-packages (2024.12.1)

Requirement already satisfied: click>=8.1 in /usr/local/lib/python3.10/dist-packages (from dask) (8.1.7)

Requirement already satisfied: cloudpickle>=3.0.0 in /usr/local/lib/python3.10/dist-packages (from dask) (3.1.0)

Requirement already satisfied: fsspec>=2021.09.0 in /usr/local/lib/python3.10/dist-packages (from dask) (2024.12.0)

Requirement already satisfied: packaging>=20.0 in /usr/local/lib/python3.10/dist-packages (from dask) (24.2)

Requirement already satisfied: partd>=1.4.0 in /usr/local/lib/python3.10/dist-packages (from dask) (1.4.2)

Requirement already satisfied: pyyaml>=5.3.1 in /usr/local/lib/python3.10/dist-packages (from dask) (6.0.2)

Requirement already satisfied: toolz>=0.10.0 in /usr/local/lib/python3.10/dist-packages (from dask) (0.12.1)

Requirement already satisfied: importlib_metadata>=4.13.0 in /usr/local/lib/python3.10/dist-packages (from dask) (8.5.0)

Requirement already satisfied: zipp>=3.20 in /usr/local/lib/python3.10/dist-packages (from importlib_metadata>=4.13.0->dask) (3.21.0)

Requirement already satisfied: locket in /usr/local/lib/python3.10/dist-packages (from partd>=1.4.0->dask) (1.0.0)

import kagglehub

kagglehub.login()Loading...

# Reinstall just to be sure

!pip install --upgrade kagglehub[pandas-datasets]

!pip install kagglehub[hf-datasets]

!pip install kagglehub[polars-datasets]

import kagglehub

from kagglehub import KaggleDatasetAdapter

# Load the dataset using the PANDAS adapter

df = kagglehub.load_dataset(

KaggleDatasetAdapter.PANDAS,

"rajeshkumarkarra/fraudTest",

"fraudTest.csv"

)

# Preview

df.head()

Loading...

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

import dask.dataframe as dd

import dask.array as da

import dask.bag as dbCollective Inspection¶

df.columnsIndex(['Unnamed: 0', 'trans_date_trans_time', 'cc_num', 'merchant', 'category',

'amt', 'first', 'last', 'gender', 'street', 'city', 'state', 'zip',

'lat', 'long', 'city_pop', 'job', 'dob', 'trans_num', 'unix_time',

'merch_lat', 'merch_long', 'is_fraud'],

dtype='object')df.shape(555719, 23)df.info()<class 'pandas.core.frame.DataFrame'>

RangeIndex: 555719 entries, 0 to 555718

Data columns (total 23 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Unnamed: 0 555719 non-null int64

1 trans_date_trans_time 555719 non-null object

2 cc_num 555719 non-null float64

3 merchant 555719 non-null object

4 category 555719 non-null object

5 amt 555719 non-null float64

6 first 555719 non-null object

7 last 555719 non-null object

8 gender 555719 non-null object

9 street 555719 non-null object

10 city 555719 non-null object

11 state 555719 non-null object

12 zip 555719 non-null int64

13 lat 555719 non-null float64

14 long 555719 non-null float64

15 city_pop 555719 non-null int64

16 job 555719 non-null object

17 dob 555719 non-null object

18 trans_num 555719 non-null object

19 unix_time 555719 non-null int64

20 merch_lat 555719 non-null float64

21 merch_long 555719 non-null float64

22 is_fraud 555719 non-null int64

dtypes: float64(6), int64(5), object(12)

memory usage: 97.5+ MB

print(type(df))<class 'pandas.core.frame.DataFrame'>

df.duplicated().sum()0df.isnull().sum()Unnamed: 0 0

trans_date_trans_time 0

cc_num 0

merchant 0

category 0

amt 0

first 0

last 0

gender 0

street 0

city 0

state 0

zip 0

lat 0

long 0

city_pop 0

job 0

dob 0

trans_num 0

unix_time 0

merch_lat 0

merch_long 0

is_fraud 0

dtype: int64df.memory_usage().sum()102252424df.describe()Loading...

df.describe(include=object)Loading...

num_df = df.select_dtypes(include='number')

num_dfLoading...

num_df.corr()Loading...

sns.heatmap(num_df.corr(), annot=True)

plt.figure(figsize=(20, 20))

<Figure size 2000x2000 with 0 Axes>Individual Inspection¶

cat_df = df.select_dtypes(include='object')

cat_dfLoading...

cat_df.columnsIndex(['trans_date_trans_time', 'merchant', 'category', 'first', 'last',

'gender', 'street', 'city', 'state', 'job', 'dob', 'trans_num'],

dtype='object')cat_df.nunique()trans_date_trans_time 226976

merchant 693

category 14

first 341

last 471

gender 2

street 924

city 849

state 50

job 478

dob 910

trans_num 555719

dtype: int64cat_df['category'].unique()array(['personal_care', 'health_fitness', 'misc_pos', 'travel',

'kids_pets', 'shopping_pos', 'food_dining', 'home',

'entertainment', 'shopping_net', 'misc_net', 'grocery_pos',

'gas_transport', 'grocery_net'], dtype=object)cat_df['category'].value_counts()category

gas_transport 56370

grocery_pos 52553

home 52345

shopping_pos 49791

kids_pets 48692

shopping_net 41779

entertainment 40104

personal_care 39327

food_dining 39268

health_fitness 36674

misc_pos 34574

misc_net 27367

grocery_net 19426

travel 17449

Name: count, dtype: int64cat_distribution = cat_df['category'].value_counts(normalize=True)

cat_distributioncategory

gas_transport 0.101436

grocery_pos 0.094568

home 0.094193

shopping_pos 0.089597

kids_pets 0.087620

shopping_net 0.075180

entertainment 0.072166

personal_care 0.070768

food_dining 0.070662

health_fitness 0.065994

misc_pos 0.062215

misc_net 0.049246

grocery_net 0.034957

travel 0.031399

Name: proportion, dtype: float64avg_amt_df_by_cat = df.groupby('category')['amt'].mean().sort_values(ascending=False)

avg_amt_df_by_catcategory

grocery_pos 115.885327

travel 112.389683

shopping_net 83.481653

misc_net 78.600237

shopping_pos 76.862457

entertainment 63.984840

gas_transport 63.577001

misc_pos 62.182246

home 57.995413

kids_pets 57.506913

health_fitness 53.867432

grocery_net 53.731667

food_dining 50.777938

personal_care 48.233021

Name: amt, dtype: float64!pip install kagglehub

!pip install --upgrade kagglehub[pandas-datasets]

!pip install kagglehub[hf-datasets]

!pip install kagglehub[polars-datasets]Requirement already satisfied: kagglehub in /usr/local/lib/python3.10/dist-packages (0.3.12)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from kagglehub) (24.2)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.10/dist-packages (from kagglehub) (6.0.2)

Requirement already satisfied: requests in /usr/local/lib/python3.10/dist-packages (from kagglehub) (2.32.3)

Requirement already satisfied: tqdm in /usr/local/lib/python3.10/dist-packages (from kagglehub) (4.67.1)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub) (3.4.1)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub) (3.10)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub) (2.3.0)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub) (2025.1.31)

Requirement already satisfied: kagglehub[pandas-datasets] in /usr/local/lib/python3.10/dist-packages (0.3.12)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from kagglehub[pandas-datasets]) (24.2)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.10/dist-packages (from kagglehub[pandas-datasets]) (6.0.2)

Requirement already satisfied: requests in /usr/local/lib/python3.10/dist-packages (from kagglehub[pandas-datasets]) (2.32.3)

Requirement already satisfied: tqdm in /usr/local/lib/python3.10/dist-packages (from kagglehub[pandas-datasets]) (4.67.1)

Requirement already satisfied: pandas in /usr/local/lib/python3.10/dist-packages (from kagglehub[pandas-datasets]) (2.2.3)

Requirement already satisfied: numpy>=1.22.4 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[pandas-datasets]) (1.26.4)

Requirement already satisfied: python-dateutil>=2.8.2 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[pandas-datasets]) (2.9.0.post0)

Requirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[pandas-datasets]) (2025.1)

Requirement already satisfied: tzdata>=2022.7 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[pandas-datasets]) (2025.1)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[pandas-datasets]) (3.4.1)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[pandas-datasets]) (3.10)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[pandas-datasets]) (2.3.0)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[pandas-datasets]) (2025.1.31)

Requirement already satisfied: mkl_fft in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (1.3.8)

Requirement already satisfied: mkl_random in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (1.2.4)

Requirement already satisfied: mkl_umath in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (0.1.1)

Requirement already satisfied: mkl in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2025.0.1)

Requirement already satisfied: tbb4py in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2022.0.0)

Requirement already satisfied: mkl-service in /usr/local/lib/python3.10/dist-packages (from numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2.4.1)

Requirement already satisfied: six>=1.5 in /usr/local/lib/python3.10/dist-packages (from python-dateutil>=2.8.2->pandas->kagglehub[pandas-datasets]) (1.17.0)

Requirement already satisfied: intel-openmp>=2024 in /usr/local/lib/python3.10/dist-packages (from mkl->numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2024.2.0)

Requirement already satisfied: tbb==2022.* in /usr/local/lib/python3.10/dist-packages (from mkl->numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2022.0.0)

Requirement already satisfied: tcmlib==1.* in /usr/local/lib/python3.10/dist-packages (from tbb==2022.*->mkl->numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (1.2.0)

Requirement already satisfied: intel-cmplr-lib-rt in /usr/local/lib/python3.10/dist-packages (from mkl_umath->numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2024.2.0)

Requirement already satisfied: intel-cmplr-lib-ur==2024.2.0 in /usr/local/lib/python3.10/dist-packages (from intel-openmp>=2024->mkl->numpy>=1.22.4->pandas->kagglehub[pandas-datasets]) (2024.2.0)

Requirement already satisfied: kagglehub[hf-datasets] in /usr/local/lib/python3.10/dist-packages (0.3.12)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from kagglehub[hf-datasets]) (24.2)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.10/dist-packages (from kagglehub[hf-datasets]) (6.0.2)

Requirement already satisfied: requests in /usr/local/lib/python3.10/dist-packages (from kagglehub[hf-datasets]) (2.32.3)

Requirement already satisfied: tqdm in /usr/local/lib/python3.10/dist-packages (from kagglehub[hf-datasets]) (4.67.1)

Requirement already satisfied: datasets in /usr/local/lib/python3.10/dist-packages (from kagglehub[hf-datasets]) (3.3.1)

Requirement already satisfied: pandas in /usr/local/lib/python3.10/dist-packages (from kagglehub[hf-datasets]) (2.2.3)

Requirement already satisfied: filelock in /usr/local/lib/python3.10/dist-packages (from datasets->kagglehub[hf-datasets]) (3.17.0)

Requirement already satisfied: numpy>=1.17 in /usr/local/lib/python3.10/dist-packages (from datasets->kagglehub[hf-datasets]) (1.26.4)

Requirement already satisfied: pyarrow>=15.0.0 in /usr/local/lib/python3.10/dist-packages (from datasets->kagglehub[hf-datasets]) (19.0.1)

Requirement already satisfied: dill<0.3.9,>=0.3.0 in /usr/local/lib/python3.10/dist-packages (from datasets->kagglehub[hf-datasets]) (0.3.8)

Requirement already satisfied: xxhash in /usr/local/lib/python3.10/dist-packages (from datasets->kagglehub[hf-datasets]) (3.5.0)

Requirement already satisfied: multiprocess<0.70.17 in /usr/local/lib/python3.10/dist-packages (from datasets->kagglehub[hf-datasets]) (0.70.16)

Requirement already satisfied: fsspec<=2024.12.0,>=2023.1.0 in /usr/local/lib/python3.10/dist-packages (from fsspec[http]<=2024.12.0,>=2023.1.0->datasets->kagglehub[hf-datasets]) (2024.12.0)

Requirement already satisfied: aiohttp in /usr/local/lib/python3.10/dist-packages (from datasets->kagglehub[hf-datasets]) (3.11.12)

Requirement already satisfied: huggingface-hub>=0.24.0 in /usr/local/lib/python3.10/dist-packages (from datasets->kagglehub[hf-datasets]) (0.29.0)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[hf-datasets]) (3.4.1)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[hf-datasets]) (3.10)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[hf-datasets]) (2.3.0)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[hf-datasets]) (2025.1.31)

Requirement already satisfied: python-dateutil>=2.8.2 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[hf-datasets]) (2.9.0.post0)

Requirement already satisfied: pytz>=2020.1 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[hf-datasets]) (2025.1)

Requirement already satisfied: tzdata>=2022.7 in /usr/local/lib/python3.10/dist-packages (from pandas->kagglehub[hf-datasets]) (2025.1)

Requirement already satisfied: aiohappyeyeballs>=2.3.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->kagglehub[hf-datasets]) (2.4.6)

Requirement already satisfied: aiosignal>=1.1.2 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->kagglehub[hf-datasets]) (1.3.2)

Requirement already satisfied: async-timeout<6.0,>=4.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->kagglehub[hf-datasets]) (5.0.1)

Requirement already satisfied: attrs>=17.3.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->kagglehub[hf-datasets]) (25.1.0)

Requirement already satisfied: frozenlist>=1.1.1 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->kagglehub[hf-datasets]) (1.5.0)

Requirement already satisfied: multidict<7.0,>=4.5 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->kagglehub[hf-datasets]) (6.1.0)

Requirement already satisfied: propcache>=0.2.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->kagglehub[hf-datasets]) (0.2.1)

Requirement already satisfied: yarl<2.0,>=1.17.0 in /usr/local/lib/python3.10/dist-packages (from aiohttp->datasets->kagglehub[hf-datasets]) (1.18.3)

Requirement already satisfied: typing-extensions>=3.7.4.3 in /usr/local/lib/python3.10/dist-packages (from huggingface-hub>=0.24.0->datasets->kagglehub[hf-datasets]) (4.12.2)

Requirement already satisfied: mkl_fft in /usr/local/lib/python3.10/dist-packages (from numpy>=1.17->datasets->kagglehub[hf-datasets]) (1.3.8)

Requirement already satisfied: mkl_random in /usr/local/lib/python3.10/dist-packages (from numpy>=1.17->datasets->kagglehub[hf-datasets]) (1.2.4)

Requirement already satisfied: mkl_umath in /usr/local/lib/python3.10/dist-packages (from numpy>=1.17->datasets->kagglehub[hf-datasets]) (0.1.1)

Requirement already satisfied: mkl in /usr/local/lib/python3.10/dist-packages (from numpy>=1.17->datasets->kagglehub[hf-datasets]) (2025.0.1)

Requirement already satisfied: tbb4py in /usr/local/lib/python3.10/dist-packages (from numpy>=1.17->datasets->kagglehub[hf-datasets]) (2022.0.0)

Requirement already satisfied: mkl-service in /usr/local/lib/python3.10/dist-packages (from numpy>=1.17->datasets->kagglehub[hf-datasets]) (2.4.1)

Requirement already satisfied: six>=1.5 in /usr/local/lib/python3.10/dist-packages (from python-dateutil>=2.8.2->pandas->kagglehub[hf-datasets]) (1.17.0)

Requirement already satisfied: intel-openmp>=2024 in /usr/local/lib/python3.10/dist-packages (from mkl->numpy>=1.17->datasets->kagglehub[hf-datasets]) (2024.2.0)

Requirement already satisfied: tbb==2022.* in /usr/local/lib/python3.10/dist-packages (from mkl->numpy>=1.17->datasets->kagglehub[hf-datasets]) (2022.0.0)

Requirement already satisfied: tcmlib==1.* in /usr/local/lib/python3.10/dist-packages (from tbb==2022.*->mkl->numpy>=1.17->datasets->kagglehub[hf-datasets]) (1.2.0)

Requirement already satisfied: intel-cmplr-lib-rt in /usr/local/lib/python3.10/dist-packages (from mkl_umath->numpy>=1.17->datasets->kagglehub[hf-datasets]) (2024.2.0)

Requirement already satisfied: intel-cmplr-lib-ur==2024.2.0 in /usr/local/lib/python3.10/dist-packages (from intel-openmp>=2024->mkl->numpy>=1.17->datasets->kagglehub[hf-datasets]) (2024.2.0)

Requirement already satisfied: kagglehub[polars-datasets] in /usr/local/lib/python3.10/dist-packages (0.3.12)

Requirement already satisfied: packaging in /usr/local/lib/python3.10/dist-packages (from kagglehub[polars-datasets]) (24.2)

Requirement already satisfied: pyyaml in /usr/local/lib/python3.10/dist-packages (from kagglehub[polars-datasets]) (6.0.2)

Requirement already satisfied: requests in /usr/local/lib/python3.10/dist-packages (from kagglehub[polars-datasets]) (2.32.3)

Requirement already satisfied: tqdm in /usr/local/lib/python3.10/dist-packages (from kagglehub[polars-datasets]) (4.67.1)

Requirement already satisfied: polars in /usr/local/lib/python3.10/dist-packages (from kagglehub[polars-datasets]) (1.9.0)

Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[polars-datasets]) (3.4.1)

Requirement already satisfied: idna<4,>=2.5 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[polars-datasets]) (3.10)

Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[polars-datasets]) (2.3.0)

Requirement already satisfied: certifi>=2017.4.17 in /usr/local/lib/python3.10/dist-packages (from requests->kagglehub[polars-datasets]) (2025.1.31)

import kagglehub

kagglehub.login()Loading...

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import kagglehub

from kagglehub import KaggleDatasetAdapter

# Load the dataset using the PANDAS adapter

df = kagglehub.load_dataset(

KaggleDatasetAdapter.PANDAS,

"rajeshkumarkarra/fraudTest",

"fraudTest.csv"

)

# Preview

df.head()

Loading...

# Shape and info

print(df.shape) # Rows and columns

print()

print(df.info()) # column types and non-null counts(555719, 23)

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 555719 entries, 0 to 555718

Data columns (total 23 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 Unnamed: 0 555719 non-null int64

1 trans_date_trans_time 555719 non-null object

2 cc_num 555719 non-null float64

3 merchant 555719 non-null object

4 category 555719 non-null object

5 amt 555719 non-null float64

6 first 555719 non-null object

7 last 555719 non-null object

8 gender 555719 non-null object

9 street 555719 non-null object

10 city 555719 non-null object

11 state 555719 non-null object

12 zip 555719 non-null int64

13 lat 555719 non-null float64

14 long 555719 non-null float64

15 city_pop 555719 non-null int64

16 job 555719 non-null object

17 dob 555719 non-null object

18 trans_num 555719 non-null object

19 unix_time 555719 non-null int64

20 merch_lat 555719 non-null float64

21 merch_long 555719 non-null float64

22 is_fraud 555719 non-null int64

dtypes: float64(6), int64(5), object(12)

memory usage: 97.5+ MB

None

# Summary

df.describe(include='all') # numerical and categorical dataLoading...

# Check for missing values

df.isnull().sum()Unnamed: 0 0

trans_date_trans_time 0

cc_num 0

merchant 0

category 0

amt 0

first 0

last 0

gender 0

street 0

city 0

state 0

zip 0

lat 0

long 0

city_pop 0

job 0

dob 0

trans_num 0

unix_time 0

merch_lat 0

merch_long 0

is_fraud 0

dtype: int64# Correlation Matrix

sns.heatmap(df.corr(numeric_only= True), annot=True)

plt.show()

Individual Inspection¶

# inspect specific columns

df['category'].value_counts()

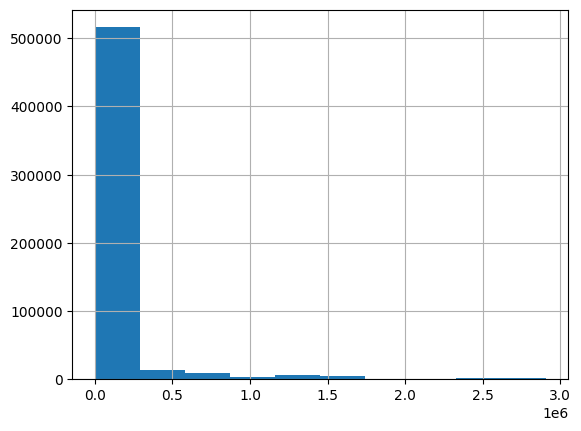

df['city_pop'].hist()<Axes: >